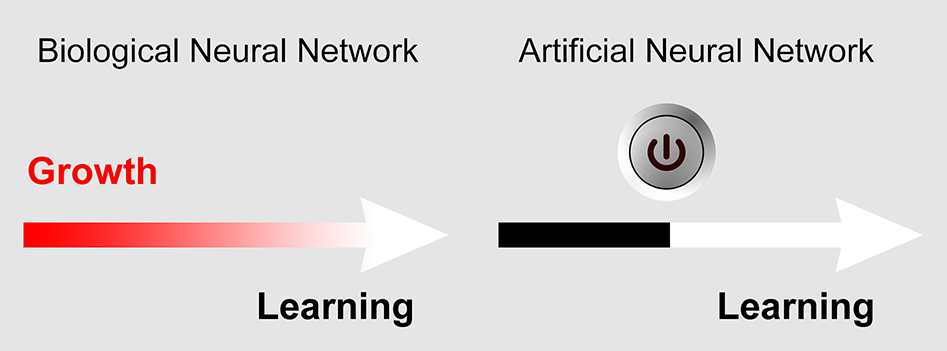

The information content of the brain, i.e. the size of a hard drive it would take for lossless up- and

downloading of information stored in biological neural networks of any size, is unknown. By

contrast, the information content of artificial neural networks that underlie virtually all

contemporary artificial intelligence, is well defined and can be saved on a hard drive in a precise

number of bits. Why has such a number never made sense for biological neural networks? The

number of bits is not simply unknown: just what should be counted or quantified is unknown, too:

a true unknown unknown. In this proposal, we offer two approaches to quantitatively assess the

information content of brain wiring using information theoretical approaches and the Drosophila

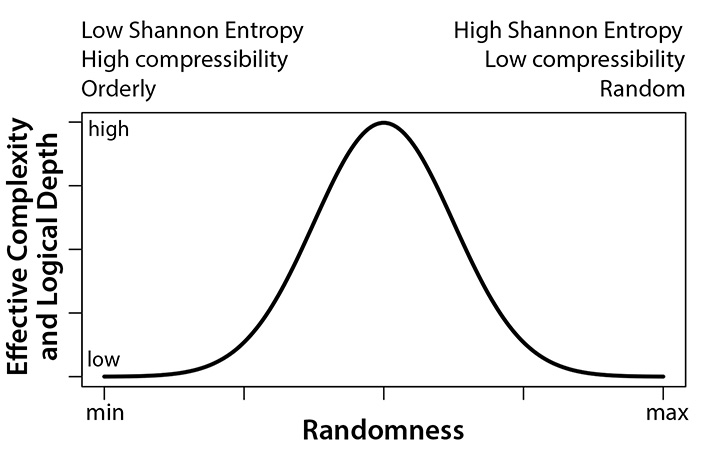

brain as a model. First, the assessment of ‘lower boundaries’ of information content based on

quantitative levels of description using Shannon entropy; these levels of description include the

newest connectome data as well as live dynamics measurements from our lab. Second, to

provide an assessment of compressibility, including approximations of logical depth based on

both lossless compression algorithms and lossy decompression of genome-driven developmental

transformations in the fly brain based on live imaging data. Together, these approaches are

devised to provide a quantitative, information theory-based bridge between neurobiology and

artificial intelligence with relevance for both fields and the public debate.
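To make the distinction between the two kinds of measure concrete, here is a minimal Python sketch. It is an illustration only, not the method of the proposal. It assumes a hypothetical toy "wiring diagram", a 64 x 64 binary adjacency matrix, and uses the size of a zlib-compressed copy as a crude upper bound on how compactly the wiring can be described. The names `shannon_entropy_bits` and `compressed_bits` are made up for this example, and logical depth is not measured here.

```python
import math
import random
import zlib
from collections import Counter


def shannon_entropy_bits(symbols):
    """Empirical Shannon entropy of a sequence, in bits per symbol."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def compressed_bits(data):
    """Size in bits after lossless DEFLATE compression (zlib, highest level)."""
    return 8 * len(zlib.compress(data, 9))


# Hypothetical toy wiring: a flattened 64 x 64 binary adjacency matrix.
N = 64

# Rule-based wiring: a checkerboard, fully described by one short rule.
regular = [(i + j) % 2 for i in range(N) for j in range(N)]

# Same entries in shuffled order: identical connection density, no simple rule.
shuffled = regular[:]
random.Random(0).shuffle(shuffled)

h_regular = shannon_entropy_bits(regular)
h_shuffled = shannon_entropy_bits(shuffled)
c_regular = compressed_bits(bytes(regular))
c_shuffled = compressed_bits(bytes(shuffled))

print(f"entropy per entry: regular={h_regular:.3f}, shuffled={h_shuffled:.3f}")
print(f"compressed size (bits): regular={c_regular}, shuffled={c_shuffled}")
```

Both matrices contain the same number of connections, so the per-entry Shannon entropy is identical, yet the rule-based matrix compresses to far fewer bits than the shuffled one. Which of these numbers counts as "the information content" depends on the level of description chosen, which is the question the two approaches above address.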

Information encoding in biological and artificial neural networks

Something to chew on: Shannon Entropy, Compressibility, Randomness, Effective Complexity and Logical Depth

More information in the press, July 16, 2025 here.