You can describe a digital image by giving the coordinates and color of every individual pixel, meaning each of the smallest component ‘dots’ that together make up the picture. Since pictures taken with modern cell phones and cameras have ever-increasing resolution, this produces rather large image files to store and send to friends. Fortunately, most images can easily be compressed to reduce file size. For example, a uniformly blue sky that covers the top half of the picture can be described by the simple instruction: ‘paint every single pixel in the top half blue’. That’s substantially less information than a description of millions of individual pixels with their coordinates and color values. Because they are so useful, image compression algorithms have become more and more sophisticated; some are lossless, while others discard the image information that seems least important. But all image compression algorithms have one thing in common: it takes time and energy to compress the original, and it again takes time and energy to decompress the compressed code and thereby reproduce the picture. When you receive a compressed picture on your cell phone, the processor in your phone must run a decompression algorithm before you can see the picture. For any even moderately advanced compression algorithm, you would not see anything like the final picture by looking at the compressed code.
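The blue-sky instruction is, in spirit, what run-length encoding does: store each stretch of identical pixels once, together with its length. The sketch below is a minimal illustration under simplifying assumptions: the picture is flattened into a single list of color names, and the names `rle_encode` and `rle_decode` as well as the image dimensions are hypothetical. Real image formats use far more elaborate schemes. Note that the encoded list of runs looks nothing like a picture; you only get the picture back by running the decoder.

```python
import random

def rle_encode(pixels):
    """Run-length encode a flat list of pixel colors into (color, count) pairs."""
    runs = []
    for color in pixels:
        if runs and runs[-1][0] == color:
            runs[-1][1] += 1  # same color as before: extend the current run
        else:
            runs.append([color, 1])  # new color: start a new run
    return [tuple(run) for run in runs]

def rle_decode(runs):
    """Expand (color, count) pairs back into the full list of pixels."""
    pixels = []
    for color, count in runs:
        pixels.extend([color] * count)
    return pixels

# Hypothetical 400 x 300 picture: top half uniformly blue, bottom half random colors.
WIDTH, HEIGHT = 400, 300
half = WIDTH * HEIGHT // 2
random.seed(0)
palette = ["red", "green", "blue", "yellow", "white", "black"]
sky = ["blue"] * half
ground = [random.choice(palette) for _ in range(half)]

sky_runs = rle_encode(sky)
ground_runs = rle_encode(ground)
print(len(sky), "sky pixels ->", len(sky_runs), "run(s)")  # prints: 60000 sky pixels -> 1 run(s)
print(len(ground), "random pixels ->", len(ground_runs), "runs")
assert rle_decode(sky_runs + ground_runs) == sky + ground  # lossless round trip
```

The uniform sky collapses to a single run, while the randomly colored half produces tens of thousands of runs and barely shrinks at all.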

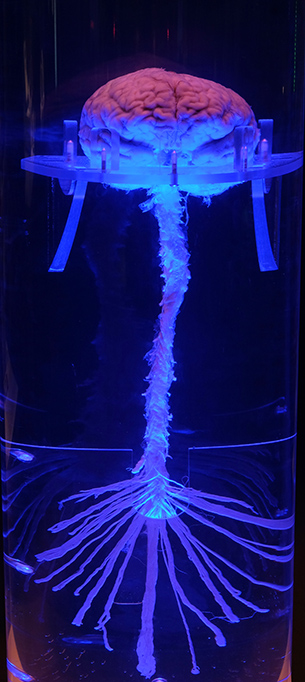

The genome is a compressed code that requires time and energy to decompress through a developmental process. You need substantially more information to describe a brain wiring diagram than you need to describe the base pair sequence in the DNA that is sufficient to grow that brain wiring diagram. The more the code is compressed, and the more iterations (repeated applications) of the decoding algorithm are required to decompress it (that is, the more time and energy), the less the compressed code will reveal about the final outcome.

Can you read from the compressed genomic code what the uncompressed output will look like, without investing the time and energy to decompress it through a lengthy developmental process?

Biologists do, in fact, routinely perform targeted genetic manipulations with (relatively) predictable outcomes. However, these predictions are generally based on previous analyses of similar outcomes. Could we predict developmental outcomes of genetically encoded processes if we had no previous examples?

While biologists may find it difficult to answer this question, a field of mathematics concerned with cellular automata provides an answer in the form of some of the simplest imaginable codes that can nonetheless produce infinite complexity given infinite iterations. The output of a simple code (consisting of an initial state and some very simple rules) after, say, 250 iterations can only be determined by one method: running the 250 iterations one by one, growing it, decompressing it, with no shortcut. For cellular automata such as rule 110 or the beloved Conway’s Game of Life, we know that the outcome of many repeated applications of their very simple underlying rule sets cannot be predicted in any other way.
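Rule 110 is an elementary cellular automaton: a row of cells, each either 0 or 1, where every cell's next state depends only on itself and its two neighbors. The sketch below assumes a finite row whose cells beyond the edges are fixed at 0 (an assumption; the mathematical automaton is usually defined on an infinite row), and the function names `step` and `run` are hypothetical. It does not prove that no shortcut exists; it simply shows that the straightforward way to obtain row 250 is to compute rows 1 through 249 first.

```python
def step(cells, rule=110):
    """Apply one update of an elementary cellular automaton to a row of 0/1 cells.

    Each cell's new state is the bit of `rule` indexed by its 3-cell
    neighborhood (left, self, right) read as a binary number 0..7.
    Cells beyond the edges are treated as 0 (fixed-boundary assumption).
    """
    padded = [0] + cells + [0]
    return [
        (rule >> (padded[i - 1] * 4 + padded[i] * 2 + padded[i + 1])) & 1
        for i in range(1, len(padded) - 1)
    ]

def run(initial, iterations, rule=110):
    """Return every row from the initial state through `iterations` updates."""
    rows = [initial]
    for _ in range(iterations):
        rows.append(step(rows[-1], rule))  # each row depends on the previous one
    return rows

# Hypothetical initial state: a single live cell at the right edge of 80 cells.
width = 80
initial = [0] * (width - 1) + [1]
history = run(initial, 40)
for row in history:
    print("".join("#" if c else "." for c in row))
```

Passing `iterations=250` (and a wider row, so the pattern has room to grow leftward) reproduces the scenario in the text; changing `rule` to any other number from 0 to 255 runs a different elementary automaton with the same machinery.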

In algorithmic information theory, the question ‘how much can the picture be compressed?’ is used to define complexity. A highly compressible picture (e.g. uniformly blue) is very ordered, can be described by a very short compressed code, and requires little information to describe; the picture is not complex in algorithmic terms. A picture in which every single pixel has a random color is (almost) incompressible and very disordered; it requires a lot of information to describe, and the picture is complex in algorithmic terms.
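Algorithmic (Kolmogorov) complexity itself cannot be computed exactly, but the output size of an ordinary compressor gives a practical upper bound on it. This minimal sketch uses Python's standard `zlib` module as that stand-in; the one-byte-per-pixel picture, the pixel value 42 standing for ‘blue’, the size `N`, and the helper name `compressed_size` are assumptions for illustration.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Length in bytes of `data` after zlib compression at the highest level.

    Only an upper-bound proxy for algorithmic complexity, which is uncomputable.
    """
    return len(zlib.compress(data, 9))

N = 100_000  # hypothetical picture of N one-byte pixels
rng = random.Random(0)

uniform = bytes([42]) * N  # every pixel the same color ("uniformly blue")
random_pixels = bytes(rng.randrange(256) for _ in range(N))  # every pixel random

print("uniform:", compressed_size(uniform), "bytes")
print("random: ", compressed_size(random_pixels), "bytes")
```

Expect the uniform picture to shrink to a tiny fraction of its original 100,000 bytes, while the random picture stays at roughly its original size: the ordered picture is simple, the random one is complex in algorithmic terms.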

If a comparatively small genome can encode an apple tree or a brain wiring diagram that requires comparatively more information to describe, then we are dealing with a compressed code for an ordered outcome. Does that mean the brain is not complex after all? The key to approaching this question is to realize how much time and energy it takes to decompress the genomic code. In The Self-Assembling Brain, I call this process algorithmic growth. Energy is information. The compressed genome stores a lot of information, assembled by evolutionary processes that took a lot of time and energy. Decompressing this information takes, in the case of the human brain, years and lots of breakfast cereal. It’s just that you could not have read it any other way. There is no shortcut. This is the hypothesis of The Self-Assembling Brain.